If you own a Western Digital My Cloud Home, you may have noticed that its end of support has long passed (mid-2023). You are probably here because you want an alternative that lets you keep using it safely.

How about taking full control of your device? Here are some reasons and use cases:

- It no longer receives firmware updates or security patches, leaving it exposed to security risks. Have you seen the number and severity of the known vulnerabilities affecting this product?

- Its hard drive has died, and a new image needs to be written to the replacement HDD or SSD before powering the device back on.

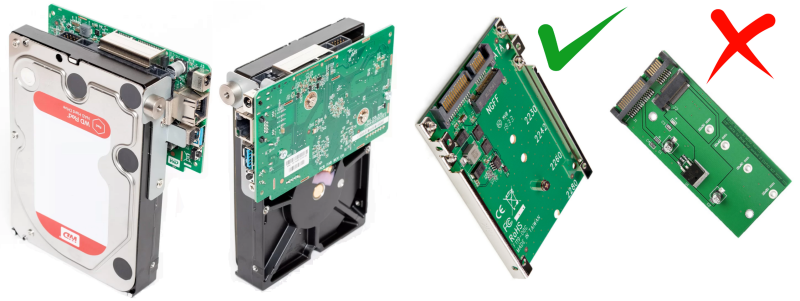

- Note: it may require an adapter, because the 3.5" HDD mounting holes are also used to hold the PCB to the case.

- Note: I have never found any report of anyone successfully replacing the drive. Please reach out if you get it working.


- Or you simply want to take full control of your device and secure it against such risks with Debian. There are lots of basic features that WD will never support, such as:

- Use a secondary network adapter or additional storage capacity via USB 3,

- SSH access to run or schedule tasks,

- Install virtually any application (host a website, run Active Directory, share a printer, etc.).

So, if you are looking for a way to keep your device secure and up to date with a clean installation of Debian 11 (built in March 2023), follow along.

INSTALLATION PROCEDURE

Preparation

- Back up your data from the NAS somewhere else (proceed at your own risk),

- Download the image file [Link] and decompress it (feel free to thoroughly inspect the image before using it),

- Format a USB drive of 8 GB or more as FAT32 with an MBR partition table,

- Copy the contents of the decompressed image to the drive.
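On a Linux PC, the preparation steps could be scripted roughly as below. This is a sketch under assumptions: the USB stick appears as /dev/sdX (a placeholder, so check with lsblk first, because formatting the wrong device wipes it), parted and dosfstools are installed, and /mnt/mch-usb is a hypothetical temporary mount point. With DRY_RUN=1 the function only prints the commands it would run.

```shell
#!/usr/bin/env bash
# Sketch: give a USB stick an MBR (msdos) partition table with one FAT32
# partition, then copy the decompressed image onto it.
# The device and directory names passed in are placeholders.

# Print the command instead of running it when DRY_RUN=1.
run() {
  if [[ "${DRY_RUN:-0}" == "1" ]]; then
    echo "$*"
  else
    "$@"
  fi
}

prepare_usb() {
  local dev="$1" img_dir="$2" mnt="${3:-/mnt/mch-usb}"
  local part="${dev}1"   # assumes sdX-style naming (/dev/sdb -> /dev/sdb1)

  if [[ -z "$dev" || ! -d "$img_dir" ]]; then
    echo "usage: prepare_usb /dev/sdX IMAGE_DIR [MOUNTPOINT]" >&2
    return 1
  fi

  # One primary FAT32 partition spanning the stick, on an MBR partition table.
  run parted -s "$dev" mklabel msdos mkpart primary fat32 1MiB 100%
  # Ask the kernel to re-read the partition table before formatting.
  run partprobe "$dev"
  run mkfs.vfat -F 32 "$part"

  # Copy the image contents (not the folder itself) to the root of the stick.
  run mkdir -p "$mnt"
  run mount "$part" "$mnt"
  run cp -r "$img_dir"/. "$mnt"/
  run sync
  run umount "$mnt"
}
```

For example, `DRY_RUN=1 prepare_usb /dev/sdX ./image` lets you review the commands (./image being a hypothetical folder holding the decompressed image) before running the same call as root without DRY_RUN.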

Execution

- Power off the NAS,

- Insert the USB drive,

- Press and hold the Reset button (right above the USB port),

- Keep holding the button while powering it on, and keep it pressed until the light turns on (about 30 seconds),

- Release the button and keep pinging the NAS: once it stops responding and then starts responding again, it has rebooted,

- Remove the USB drive.
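The ping-watching step can be automated with a small loop like the one below. It is a sketch assuming a Linux machine with iputils ping (for the -c and -W flags) and that you already know the NAS's IP address; the 2-second polling interval and the 600-poll limit are arbitrary choices.

```shell
#!/usr/bin/env bash
# Sketch: watch the NAS go down and come back up after the reset-button flash.

# Return success if HOST answers a single ping within 1 second.
nas_is_up() {
  ping -c 1 -W 1 "$1" >/dev/null 2>&1
}

# Wait until HOST stops answering, then until it answers again.
# Polls every INTERVAL seconds (default 2) and gives up after MAX_POLLS checks.
wait_for_reboot() {
  local host="$1" interval="${2:-2}" max_polls="${3:-600}"
  local polls=0 seen_down=0

  while (( polls < max_polls )); do
    if nas_is_up "$host"; then
      if (( seen_down )); then
        echo "$host is back up"
        return 0
      fi
    elif (( ! seen_down )); then
      echo "$host went down, waiting for it to come back..."
      seen_down=1
    fi
    polls=$((polls + 1))
    sleep "$interval"
  done
  echo "timed out waiting for $host" >&2
  return 1
}
```

`wait_for_reboot 192.168.1.50` (an example address) prints one line when the NAS goes down and another when it answers again, which is the moment to remove the USB drive.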

FIRST ACCESS

```shell
ssh root@IP
```

Replace IP with the address auto-assigned to the NAS. You can find it by looking at your DHCP server's leases, doing an ARP scan, scanning for port 22, etc.
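If you have no access to the DHCP server's lease table, a port 22 sweep of the local network can be done with bash alone, as in this sketch. It assumes a /24 LAN (the 192.168.1 prefix in the usage note is a placeholder) and relies on bash's /dev/tcp feature plus coreutils timeout; all 254 probes run in parallel, so the sweep takes a few seconds.

```shell
#!/usr/bin/env bash
# Sketch: list hosts on a /24 network that accept TCP connections on a port.

# Return success if HOST accepts a TCP connection on PORT within 1 second.
port_open() {
  timeout 1 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

# Print every address PREFIX.1 .. PREFIX.254 with PORT (default 22) open,
# sorted by the last octet.
find_ssh_hosts() {
  local prefix="$1" port="${2:-22}" i
  {
    for i in {1..254}; do
      # Probe in the background so the hosts are checked concurrently.
      ( port_open "$prefix.$i" "$port" && echo "$prefix.$i" ) &
    done
    wait
  } | sort -t . -k 4,4n
}
```

Run `find_ssh_hosts 192.168.1` and try `ssh root@` each address it prints. If nmap is installed, `nmap -p 22 --open 192.168.1.0/24` does the same job.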

The default password is "password". Change it immediately!

Apply basic hygiene to the system: create your own account, give it sudo privileges, disable SSH login as root, enforce the use of SSH keys, etc.
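The account and SSH parts of that hygiene could look like the sketch below. The function names are hypothetical, and it assumes Debian's stock OpenSSH, where sshd_config ships with commented defaults such as #PermitRootLogin. Copy your public key to the new account (for example with ssh-copy-id) and confirm key login works before disabling password authentication, or you can lock yourself out.

```shell
#!/usr/bin/env bash
# Sketch: create an admin account and harden sshd (run as root).

# Set KEY to VALUE in an sshd_config-style FILE, replacing any existing
# (possibly commented-out) line for KEY, or appending it if absent.
set_sshd_option() {
  local file="$1" key="$2" value="$3"
  if grep -Eq "^[#[:space:]]*${key}[[:space:]]" "$file"; then
    sed -i -E "s|^[#[:space:]]*${key}[[:space:]].*|${key} ${value}|" "$file"
  else
    echo "${key} ${value}" >> "$file"
  fi
}

# No root logins, no passwords, public keys only.
harden_sshd() {
  local file="${1:-/etc/ssh/sshd_config}"
  set_sshd_option "$file" PermitRootLogin no
  set_sshd_option "$file" PasswordAuthentication no
  set_sshd_option "$file" PubkeyAuthentication yes
}

# Create USER interactively and add it to Debian's "sudo" group.
create_admin() {
  local user="$1"
  adduser "$user"
  usermod -aG sudo "$user"
}
```

As root, run `create_admin alice` (a hypothetical user name), copy your key to that account, then run `harden_sshd`, validate the file with `sshd -t` and apply it with `systemctl restart ssh`. On Debian 11 the file starts with an Include of /etc/ssh/sshd_config.d/*.conf, and values set there win over later lines, so check that directory too.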

Then check for additional updates:

```shell
sudo apt update
sudo apt upgrade -y
sudo apt --purge autoremove -y
sudo apt autoclean -y
sudo apt dist-upgrade -y
sudo apt --purge autoremove -y
sudo apt autoclean -y
```

Always monitor the mounted partitions with df -h, because they are small and can fill up easily. A workaround is to use symbolic links to move directories to the bigger partition (/srv/dev-sataa24/).
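Both halves of that advice can be sketched in bash: full_partitions filters df -P output by a usage threshold (80% by default, an arbitrary choice), and relocate_dir implements the symbolic-link workaround. The paths in the usage note, including /srv/dev-sataa24/relocated, are hypothetical examples; stop any service that writes to a directory before moving it.

```shell
#!/usr/bin/env bash
# Sketch: find nearly full partitions and move a directory to the big data
# partition, leaving a symbolic link at the old path.

# Read POSIX-format df output (df -P) on stdin and print "MOUNTPOINT USE%"
# for every filesystem at or above THRESHOLD percent (default 80).
full_partitions() {
  local threshold="${1:-80}"
  awk -v t="$threshold" 'NR > 1 {
    use = $5
    sub(/%/, "", use)
    if (use + 0 >= t) print $6, $5
  }'
}

# Move directory SRC into DEST_PARENT and replace SRC with a symlink to the copy.
relocate_dir() {
  local src="${1%/}" dest_parent="${2%/}"
  local dest
  dest="$dest_parent/$(basename "$src")"

  if [[ ! -d "$src" || -L "$src" ]]; then
    echo "not a real directory: $src" >&2
    return 1
  fi
  if [[ -e "$dest" ]]; then
    echo "destination already exists: $dest" >&2
    return 1
  fi

  # Copy with ownership, permissions and timestamps preserved (cp -a),
  # delete the original only if the copy succeeded, then link the old path.
  mkdir -p "$dest_parent" &&
    cp -a "$src" "$dest" &&
    rm -rf "$src" &&
    ln -s "$dest" "$src"
}
```

`df -P | full_partitions 70` lists the crowded partitions; `relocate_dir /var/cache/apt /srv/dev-sataa24/relocated` would then move apt's cache to the big partition and leave a link in its place.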

UNDERSTANDING THE NEW SYSTEM

The single-bay 2 TB version that I have (also available in higher capacities) has the following hardware specifications:

- Storage capacity: WD Red 3.5" HDD, 2 TB (usable < 1.8 TB)

- Processor: ARM Cortex-A53 Quad-Core 1.4 GHz 64-Bit

- Memory: 1 GB DDR3 (730 MB usable)

- Network interface: Gigabit Ethernet

- USB port: One USB 3.0 Type-A port on the back.

- Power: ~7 W in use (18 W max), about 60 kWh/year.

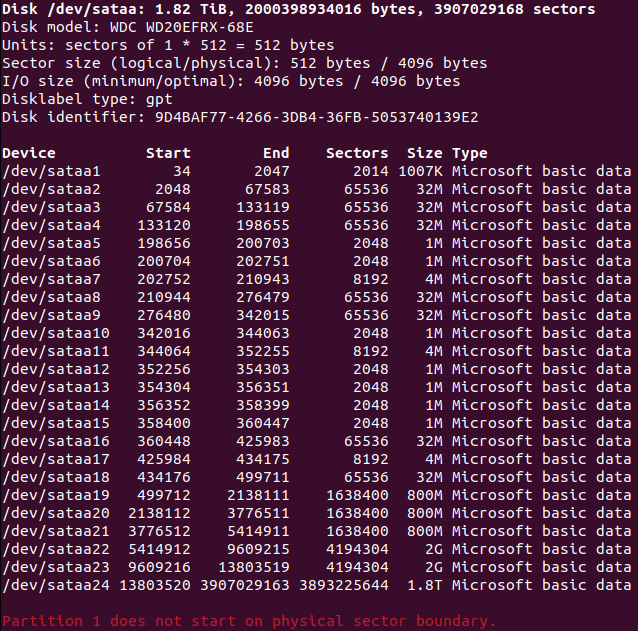
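The yearly energy figure is simple arithmetic, assuming the NAS runs 24/7 at its typical ~7 W draw over a 365-day year:

```latex
E_{\text{typ}} = 7\,\text{W} \times 24\,\tfrac{\text{h}}{\text{day}} \times 365\,\text{days} = 61\,320\,\text{Wh} \approx 61\,\text{kWh/year}
E_{\text{max}} = 18\,\text{W} \times 8\,760\,\text{h} = 157\,680\,\text{Wh} \approx 158\,\text{kWh/year}
```

So the "about 60 kWh/year" figure is the typical case, and the 18 W maximum sets an upper bound of roughly 158 kWh/year.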

On the hard drive, there are 24 partitions (on GPT), as follows:

- sataa1 to sataa19 and sataa23: not used by the operating system, but I would not play with them.

- sataa20: 775 MB (12% used), root of the file system (/).

- sataa21: 775 MB (67% used), mount point /var.

- sataa22: 2 GB (73% used), mount point /usr.

- sataa24: 1.8 TB, user storage space mounted on /srv/dev-sataa24/.

Note: it is not recommended to touch partitions 1 to 23, because doing so could break the recovery procedure. The last partition can be resized to use the drive's full capacity.
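To confirm after installation that the mounts match the partition list above, a check like the following could be used. It is a sketch that assumes the kernel reports the partitions as /dev/sataa20 and so on in /proc/mounts; some systems show the root filesystem as /dev/root instead, in which case that line reports a mismatch even though nothing is wrong. It will also flag /srv/dev-sataa24 if you moved the big partition elsewhere.

```shell
#!/usr/bin/env bash
# Sketch: compare the mounted devices against the expected partition layout.
# Reads a mounts table (default /proc/mounts: device, mount point, ...).

check_layout() {
  local mounts="${1:-/proc/mounts}" status=0 mp expected actual
  declare -A want=(
    [/]=/dev/sataa20
    [/var]=/dev/sataa21
    [/usr]=/dev/sataa22
    [/srv/dev-sataa24]=/dev/sataa24
  )
  for mp in / /var /usr /srv/dev-sataa24; do
    expected="${want[$mp]}"
    # Last entry wins if a mount point appears more than once.
    actual=$(awk -v m="$mp" '$2 == m { print $1 }' "$mounts" | tail -n 1)
    if [[ "$actual" == "$expected" ]]; then
      echo "OK   $mp on $actual"
    else
      echo "FAIL $mp on ${actual:-<not mounted>} (expected $expected)"
      status=1
    fi
  done
  return "$status"
}
```

`check_layout` prints one OK or FAIL line per mount point and returns non-zero if anything differs.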

Inspecting the image written to the USB drive reveals the following file structure:

- rescue.root.sata.cpio.gz_pad.img

- rescue.sata.dtb

- sata.uImage

- bluecore.audio

- omv/20-root.tar.gz (ends up being copied to sataa20)

- omv/21-var.tar.gz (ends up being copied to sataa21)

- omv/22-usr.tar.gz (ends up being copied to sataa22)

- omv/bootConfig

- omv/fwtable.bin

- omv/rootfs.bin
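Before booting the NAS from the stick, it is worth checking that every file in that listing made it onto the drive. This sketch assumes the stick is mounted at a path you pass in (for example the hypothetical /mnt/mch-usb) and only checks that each file exists; it cannot tell whether a file is corrupted.

```shell
#!/usr/bin/env bash
# Sketch: verify that the image files listed above exist on the USB drive.

IMAGE_FILES=(
  rescue.root.sata.cpio.gz_pad.img
  rescue.sata.dtb
  sata.uImage
  bluecore.audio
  omv/20-root.tar.gz
  omv/21-var.tar.gz
  omv/22-usr.tar.gz
  omv/bootConfig
  omv/fwtable.bin
  omv/rootfs.bin
)

# Print each missing file; return failure if at least one is missing.
verify_usb_image() {
  local root="${1%/}" missing=0 f
  for f in "${IMAGE_FILES[@]}"; do
    if [[ ! -f "$root/$f" ]]; then
      echo "missing: $f"
      missing=1
    fi
  done
  return "$missing"
}
```

`verify_usb_image /mnt/mch-usb` prints nothing and succeeds when all ten files are present.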

After the image is written to the device, its resource utilization is as follows:

- ~20% RAM utilization (~140 MB of 730 MB),

- < 5% CPU utilization when idle.
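These figures can be reproduced from /proc/meminfo. The sketch below counts used memory as MemTotal minus MemAvailable, which is one common definition (tools such as free may report slightly different numbers), and rounds down to whole MB and whole percent.

```shell
#!/usr/bin/env bash
# Sketch: report RAM usage as MemTotal - MemAvailable from a meminfo file
# (default /proc/meminfo, values in kB), rounded down to MB and percent.
mem_usage() {
  local meminfo="${1:-/proc/meminfo}"
  awk '/^MemTotal:/     { total = $2 }
       /^MemAvailable:/ { avail = $2 }
       END {
         used = total - avail
         printf "%d MB / %d MB (%d%%)\n", used / 1024, total / 1024, used * 100 / total
       }' "$meminfo"
}
```

With the figures quoted above (about 140 MB used of 730 MB), it would print 140 MB / 730 MB (19%). For CPU load, `vmstat 5 3` shows the idle percentage in its id column.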

HOW IT WAS BUILT

Kudos to CyberTalk [Link], who originally released an image that overwrites the WD operating system with Debian 9 and OMV 4 pre-installed.

Goals and Reflections

- OMV (openmediavault) version 4 (Arrakis) was released in 2018 and reached its EOL (end of life) in 2020.

- Not all repositories were still available at the time this post was written.

- Debian 9 (Stretch) was released in 2017 and its EOL was in 2020.

- There was not enough space to run apt update.


- Some use cases will NOT need or want this piece of software installed.

- All the moving pieces related to OMV needed to be stripped from the system before the upgrade.

- Keep it bare-bones (lightweight) so anyone can install only the applications they need.

- In the event of a hard drive failure, have a full image with the complete partition scheme ready to write to a new drive and fire it up.

- I don't know whether the partition scheme must be preserved (cloned) on the new drive for the firmware to boot properly [Link].

Thanks again to the CyberTalk folks who made the base image; I only cleaned and updated it.

BONUS

- Disable IPv6 if not needed,

- Change /etc/fstab to mount the big partition in the desired location instead of /srv/dev-sataa24,

- Do not try to install UFW; it will not work (if you know how to make it work, please ping me). Instead, use iptables to create firewall rules.

- NFS was broken on the original image (Debian 9), and I could not make it work on any version (if you know how to make it work, please ping me).

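- As an alternative to the NFS server, which fails to load, NFS Ganesha works:

  - Install it and open its configuration file:

    ```shell
    sudo apt install nfs-ganesha nfs-ganesha-vfs -y
    sudo nano /etc/ganesha/ganesha.conf
    ```

  - Add an export, replacing the path, pseudo path and client network with your own:

    ```
    EXPORT {
        Export_Id = 1;
        Path = /PATH_TO_SHARE;
        Pseudo = /SHARE_NAME;
        Access_Type = RW;
        Squash = No_Root_Squash;
        FSAL {
            Name = VFS;
        }
        clients = 192.168.1.0/24;
    }
    ```

  - Restart the service and check its status:

    ```shell
    sudo systemctl restart nfs-ganesha
    sudo systemctl status nfs-ganesha
    ```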

- For local network file sharing, Samba is already installed out of the box.

- For powerful and secure file sharing and storage over the Internet, I recommend MinIO, an enterprise-grade, AWS S3-compatible server [Link].

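Three of the bonus tweaks (IPv6, fstab, iptables) could be scripted roughly as follows. This is a sketch under assumptions: the sysctl file name 99-disable-ipv6.conf is an arbitrary choice, /data in the usage note is a hypothetical new mount point, and the firewall allows only SSH (22), SMB (445) and ping from a LAN range you pass in (192.168.1.0/24 is a placeholder). With DRY_RUN=1 the iptables commands are printed instead of executed.

```shell
#!/usr/bin/env bash
# Sketch of three bonus tweaks (run as root). File paths and the LAN range
# are parameters so each function can be tried on a copy first.

# 1) Disable IPv6 with a sysctl drop-in file; apply with: sysctl --system
disable_ipv6() {
  local conf="${1:-/etc/sysctl.d/99-disable-ipv6.conf}"
  printf '%s\n' \
    'net.ipv6.conf.all.disable_ipv6 = 1' \
    'net.ipv6.conf.default.disable_ipv6 = 1' > "$conf"
}

# 2) Change the mount point OLD to NEW in an fstab file, leaving comments
#    and all other entries untouched. A backup is kept as FSTAB.bak.
change_mountpoint() {
  local fstab="$1" old="$2" new="$3"
  cp "$fstab" "$fstab.bak"
  awk -v old="$old" -v new="$new" 'BEGIN { OFS = "\t" }
    !/^[ \t]*#/ && $2 == old { $2 = new }
    { print }' "$fstab.bak" > "$fstab"
}

# 3) Minimal stateful IPv4 firewall: allow loopback, replies to outgoing
#    traffic, and SSH, SMB and ping from the LAN; drop all other input.
ipt() {
  if [[ "${DRY_RUN:-0}" == "1" ]]; then echo iptables "$@"; else iptables "$@"; fi
}

apply_firewall() {
  local lan="${1:-192.168.1.0/24}"
  ipt -P INPUT ACCEPT   # avoid locking yourself out while the chain is flushed
  ipt -F INPUT
  ipt -A INPUT -i lo -j ACCEPT
  ipt -A INPUT -m conntrack --ctstate ESTABLISHED,RELATED -j ACCEPT
  ipt -A INPUT -s "$lan" -p tcp --dport 22 -j ACCEPT
  ipt -A INPUT -s "$lan" -p tcp --dport 445 -j ACCEPT
  ipt -A INPUT -s "$lan" -p icmp -j ACCEPT
  ipt -P INPUT DROP
}
```

For example: `disable_ipv6` followed by `sysctl --system`; `mkdir -p /data && change_mountpoint /etc/fstab /srv/dev-sataa24 /data` followed by a reboot; and `apply_firewall 192.168.1.0/24`. The iptables rules are lost on reboot unless saved, for example with the iptables-persistent package (`iptables-save > /etc/iptables/rules.v4`). If you use NFS Ganesha, also allow TCP port 2049 from the LAN.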